By the end of 2025, Roblox Corporation will require every user accessing chat or voice features on its platform to prove their age — not by what they type in at signup, but by scanning their government ID and taking a real-time selfie. The move, announced in September 2025, marks one of the most aggressive attempts yet by a major online platform to separate adults from minors in digital spaces. It’s not just about blocking inappropriate messages; it’s about rewiring how a generation that grew up in virtual worlds learns to interact — and who they’re allowed to talk to.

How the Verification System Works

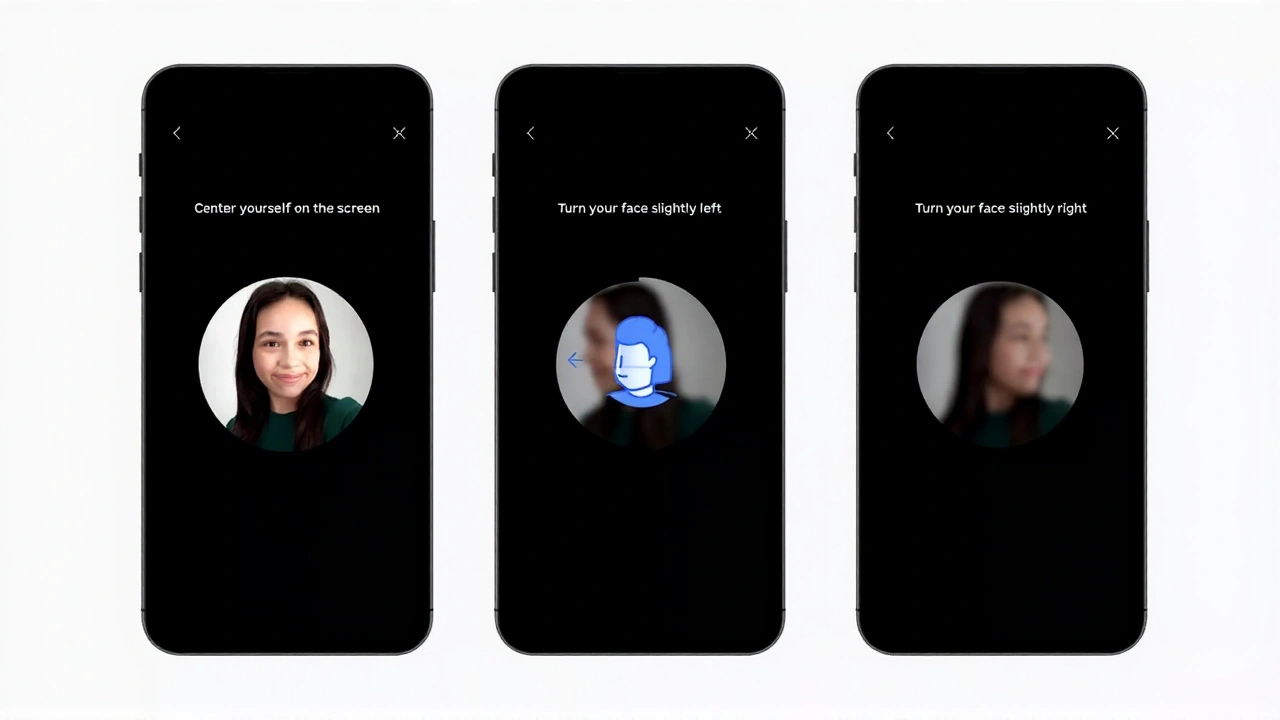

Users aged 13 and older must now log in, head to Settings, and click "Verify My Age." A QR code appears, prompting them to open their mobile device’s camera. From there, they scan both sides of a government-issued photo ID (driver’s license, passport, or residency card) while the system auto-detects the document type. Then comes the selfie: a live capture that the system compares against the photo on the ID within milliseconds. No filters. No edits. No exceptions. The capture itself takes under five minutes, but the result can take up to 20 minutes to arrive, depending on server load.

Those who fail, whether due to expired documents, poor lighting, or mismatched facial features, get a second chance. But after two failed attempts, their account is locked until a parent or guardian submits verified consent. That’s the third leg of Roblox’s three-part system, after the ID scan and the selfie: if you’re under 18 and can’t verify your age with ID, your parents must confirm your identity through a secure portal tied to their own verified account.
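The attempt-and-lockout rule described above can be pictured as a small state machine. The Python sketch below is purely illustrative: the names `Account`, `Status`, `record_attempt`, and `record_parental_consent` are hypothetical, and treating parental consent as a transition straight to "verified" is an assumption. Only the two-attempt limit and the parental unlock come from Roblox’s description.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    UNVERIFIED = "unverified"
    VERIFIED = "verified"
    LOCKED = "locked"  # waiting on parent or guardian consent


# From the article: two failed attempts lock the account.
MAX_FAILED_ATTEMPTS = 2


@dataclass
class Account:
    status: Status = Status.UNVERIFIED
    failed_attempts: int = 0


def record_attempt(account: Account, id_valid: bool, selfie_matches: bool) -> Status:
    """Apply one ID-plus-selfie attempt and return the resulting status."""
    if account.status is not Status.UNVERIFIED:
        # Verified or locked accounts ignore further attempts.
        return account.status
    if id_valid and selfie_matches:
        account.status = Status.VERIFIED
    else:
        account.failed_attempts += 1
        if account.failed_attempts >= MAX_FAILED_ATTEMPTS:
            account.status = Status.LOCKED
    return account.status


def record_parental_consent(account: Account, parent_verified: bool) -> Status:
    """Unlock a locked account once a verified parent confirms the child.

    Assumption: consent moves the account directly to VERIFIED.
    """
    if account.status is Status.LOCKED and parent_verified:
        account.status = Status.VERIFIED
        account.failed_attempts = 0
    return account.status
```

In this sketch, a first failure leaves the account unverified, a second failure locks it, and only consent from a verified parent clears the lock.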

Why This Matters — And Who It Impacts Most

Roblox has over 70 million daily active users, half of them under 13. For years, kids as young as six have been chatting with strangers posing as peers — some harmless, others predatory. The platform’s old system relied on self-reported birthdates. Easy to fake. Easy to ignore. Now, that’s over.

Here’s the twist: the system doesn’t just block adults from talking to minors. It blocks all cross-age communication unless the users already know each other in real life. That means if a 19-year-old college student and a 12-year-old cousin both play Roblox, they can still message — if they’ve linked their accounts as "known contacts." But if that 19-year-old is a stranger who met them in a virtual concert? Blocked. Period.
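The rule can be read as a check on two verified age groups plus a "known contacts" link. The Python sketch below is illustrative only. The function names are hypothetical, and two points are assumptions rather than Roblox’s published logic: that "cross-age" means "in different age groups," and that an unverified user cannot chat at all. The three group labels are the age-group flags Roblox says it retains after verification.

```python
from typing import Optional


def age_group(age: int) -> str:
    """Map an age to one of the three groups Roblox says it keeps on file."""
    if age < 13:
        return "Under 13"
    if age < 18:
        return "13-17"
    return "18+"


def can_chat(group_a: Optional[str], group_b: Optional[str], known_contacts: bool) -> bool:
    """Decide whether two users may message each other.

    A group of None stands for a user who has not completed verification.
    """
    if group_a is None or group_b is None:
        return False  # assumption: unverified users have no chat access
    if group_a == group_b:
        return True  # same age group, so not cross-age
    # Cross-age chat is allowed only for linked "known contacts".
    return known_contacts


# The article's example: a 19-year-old and a 12-year-old cousin.
cousins = can_chat(age_group(19), age_group(12), known_contacts=True)
strangers = can_chat(age_group(19), age_group(12), known_contacts=False)
```

Under these assumptions, `cousins` is `True` and `strangers` is `False`, matching the two cases in the paragraph above.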

And it’s not just chat. Roblox is also upgrading its avatar moderation AI to scan 3D environments for rule-breaking outfits or behaviors — think revealing clothing, suggestive gestures, or virtual "private spaces" disguised as bedrooms or bathrooms. These scenes, once common in "hangout" games, will now be auto-flagged and restricted to users 18 and older. Previously, the cutoff was 17.

What Else Is Changing?

Alongside age verification, Roblox is making unrated experiences unplayable by default. That’s huge. For years, players could stumble into anything — from horror games with jump scares to adult-themed roleplay servers — simply by clicking "Popular." Now, those experiences are hidden unless a user manually toggles their age filter to "18+" and confirms they understand the risks.

Roblox is also joining an unnamed top-rated child safety organization, likely the Internet Safety Technical Task Force or similar. The company won’t name them publicly — a sign they’re preparing for regulatory scrutiny. Meanwhile, parental controls are getting a major overhaul: new dashboards show not just playtime, but chat partners, game types visited, and even which friends were added in the past week.

"No System Is Foolproof" — But This Is a Start

"We’re taking this step as part of our long-term vision as a platform for all ages," Roblox stated in its September 2025 announcement. "We expect that our approach to communication safety will become best practice for other online platforms, whether lawmakers pass laws requiring age verification for all platforms in the future or not." That’s the real story here. Roblox isn’t just reacting to pressure. It’s trying to lead it. While the EU’s Digital Services Act and California’s Age-Appropriate Design Code are forcing platforms to act, Roblox is going further — voluntarily. They’re betting that trust, not regulation, will keep users.

But critics aren’t convinced. Privacy advocates warn that facial recognition on children’s devices could set a dangerous precedent. "If Roblox can scan a 12-year-old’s face to verify age, what’s stopping another company from doing it for ads? Or insurance?" asked Dr. Lena Torres, a digital ethics professor at Stanford. "This isn’t just safety; it’s normalization of biometric surveillance in childhood." Roblox counters that all biometric data is deleted after verification. No storage. No retention. Just a single age-group flag ("Under 13," "13-17," or "18+") and nothing else. Still, the optics are tricky.

What Comes Next?

By December 31, 2025, every user accessing voice or text chat must be verified. Those who don’t comply? Their accounts will be downgraded to read-only mode — they can still build games and play solo, but no chatting. No friends. No groups.

Already, other platforms like VRChat and Discord are quietly testing similar systems. The industry is watching. If Roblox’s model reduces reports of grooming by 50%, as internal tests suggest, expect a wave of copycats.

Background: The Safety Evolution of Roblox

Roblox’s safety journey began in 2017, when it first filtered public chat for profanity. By 2020, private chat was blocked for users under 13. In 2022, it banned user-to-user image sharing after a wave of explicit content surfaced. Each step was met with backlash — "It’s too restrictive," parents complained. "We just want our kids to have fun." But the tide turned in 2023, after a high-profile case in Texas where a 14-year-old girl was groomed via voice chat in a Roblox game, leading to a federal investigation. Since then, Roblox has spent over $200 million on safety tech, hiring 800+ content moderators and building AI models trained on thousands of real abuse reports.

Now, with facial recognition and ID scanning, they’re crossing a line many thought they never would. But for millions of parents who’ve feared what their kids might encounter online? It’s not a step too far. It’s the step they’ve been waiting for.

Frequently Asked Questions

How does Roblox ensure my child’s biometric data isn’t stored?

Roblox says all facial scans and ID images are processed in real time and deleted immediately after verification, with nothing stored on its servers. The system retains only a numeric age-group tag, not the image itself. No independent audit has confirmed this yet, though Roblox says third-party security firms have validated the deletion protocol. Parents can request a full data deletion via the Privacy Dashboard.

What if my child doesn’t have a government ID?

Users under 18 without a government ID must have a parent or guardian verify their age using their own verified account. The parent logs in, links their child’s profile, and confirms the child’s identity through a secure portal. This process requires the parent’s own ID verification and a video call with Roblox’s support team. It’s slower but ensures minors aren’t locked out.

Can adults still talk to teens if they’re family members?

Yes — but only if they’ve added each other as "Known Contacts" in the platform’s safety settings. This requires both parties to verify their identities and manually link accounts. Once linked, they can chat freely regardless of age difference. This feature is designed for real-world relationships — not just online friends pretending to be family.

What happens if someone lies about their age during verification?

If a user is caught using a fake ID or someone else’s photo, their account is permanently banned. Roblox uses liveness detection to spot deepfakes and photo substitutions. They’ve already banned over 12,000 accounts in testing for ID fraud. Repeat offenders face legal reporting in jurisdictions where child safety laws require it, including the U.S. and EU.

Will this affect users outside the U.S.?

Yes. Roblox accepts government-issued IDs from over 150 countries, including national ID cards, passports, and driver’s licenses. The system uses AI trained on international document formats. Users in countries without photo IDs (like some in Southeast Asia) can still verify via parental consent. Global rollout begins October 2025, with localized support teams in 12 languages.

Is this really going to stop predators?

It won’t stop all of them — determined offenders may still find ways around it. But early internal data shows a 68% drop in unsolicited contact attempts from users flagged as "adults" after age verification went live in beta. The biggest reduction? In "private hangout" games. Predators rely on anonymity. Roblox is removing that shield — and that’s making a measurable difference.